Abstract

Text-driven 3D scene editing has gained significant attention owing to its convenience and user-friendliness. However, existing methods still lack accurate control over the appearance and location of the editing result because of the inherent limitations of text descriptions. To this end, we propose a 3D scene editing framework, TIP-Editor, that accepts both text and image prompts, together with a 3D bounding box that specifies the editing region. With the image prompt, users can conveniently specify the detailed appearance or style of the target content to complement the text description, enabling accurate control of the appearance. Specifically, TIP-Editor employs a stepwise 2D personalization strategy to better learn the representation of the existing scene and the reference image, in which a localization loss is proposed to encourage correct object placement as specified by the bounding box. Additionally, TIP-Editor utilizes explicit and flexible 3D Gaussian splatting (GS) as the 3D representation to facilitate local editing while keeping the background unchanged. Extensive experiments demonstrate that TIP-Editor performs accurate editing that follows the text and image prompts within the specified bounding box region, consistently outperforming the baselines in editing quality and in alignment to the prompts, both qualitatively and quantitatively.

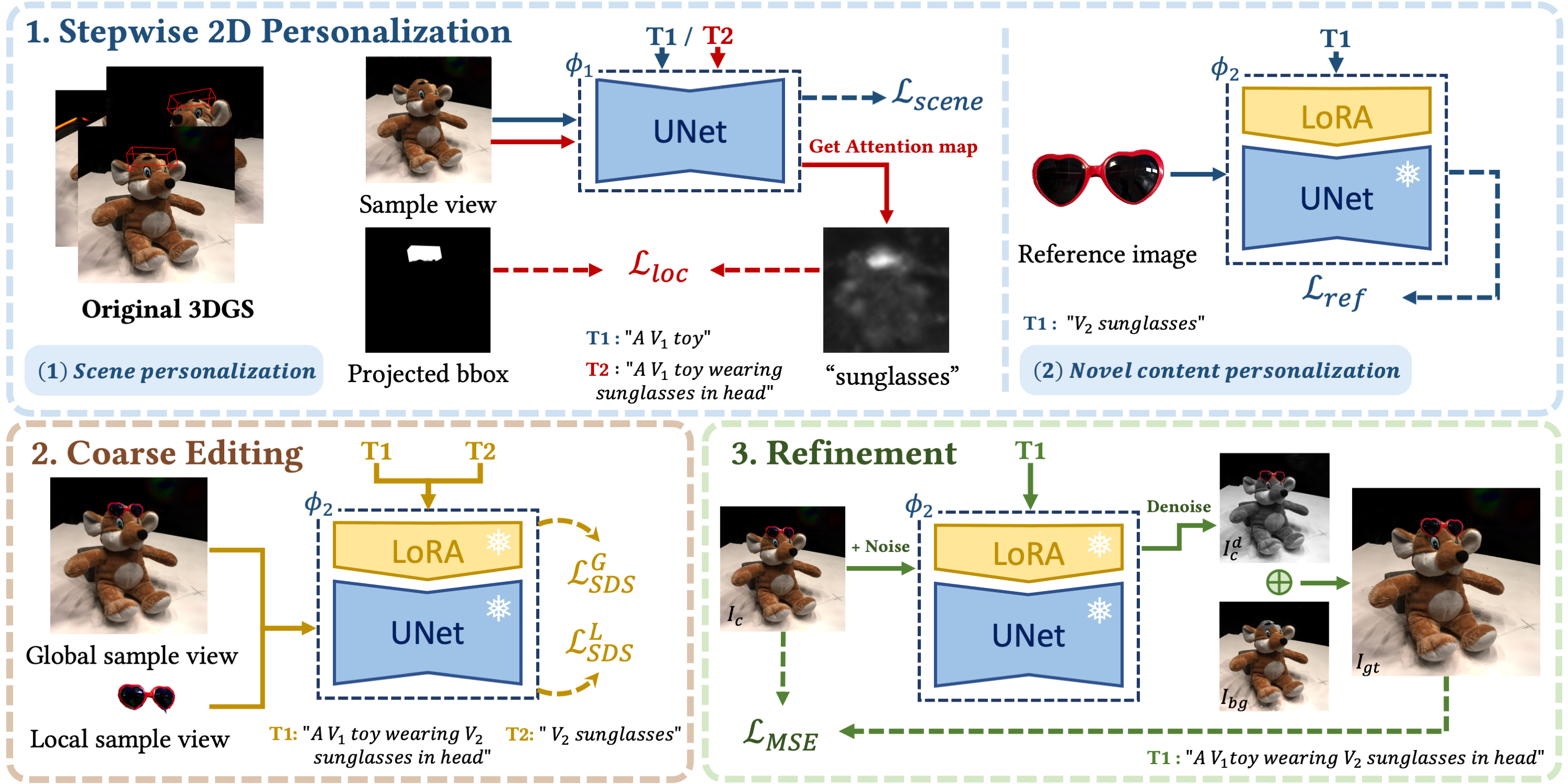

1. Framework of our TIP-Editor

Overview of our TIP-Editor:

TIP-Editor optimizes a 3D scene, represented with 3D Gaussian splatting (GS), to conform to a given hybrid text-image prompt. The editing process includes three stages: 1) a stepwise 2D personalization strategy, which features a localization loss in the scene personalization step and a separate, LoRA-based novel content personalization step dedicated to the reference image; 2) a coarse editing stage using score distillation sampling (SDS); and 3) a pixel-level texture refinement stage, which uses carefully generated pseudo-GT images derived from both the rendered image and the denoised image.
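For reference, the coarse editing stage relies on SDS. The block below gives the commonly used form of the SDS gradient as introduced in DreamFusion; it is a general statement of the technique, not a transcription of TIP-Editor's exact objective, and the symbols are assumptions of this sketch: $\theta$ denotes the scene parameters, $x = g(\theta)$ a rendered image, $x_t$ its noised version at timestep $t$, $y$ the prompt, $\epsilon_\phi$ the noise predictor of the diffusion model, and $w(t)$ a timestep weighting.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
= \mathbb{E}_{t,\epsilon}\!\left[\, w(t)\,\bigl(\epsilon_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right]
$$

Intuitively, the rendered image is pushed in the direction in which the diffusion model believes the noise should be removed, so that renderings from all views move toward images that match the prompt.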

2. Rendering videos of our editing results

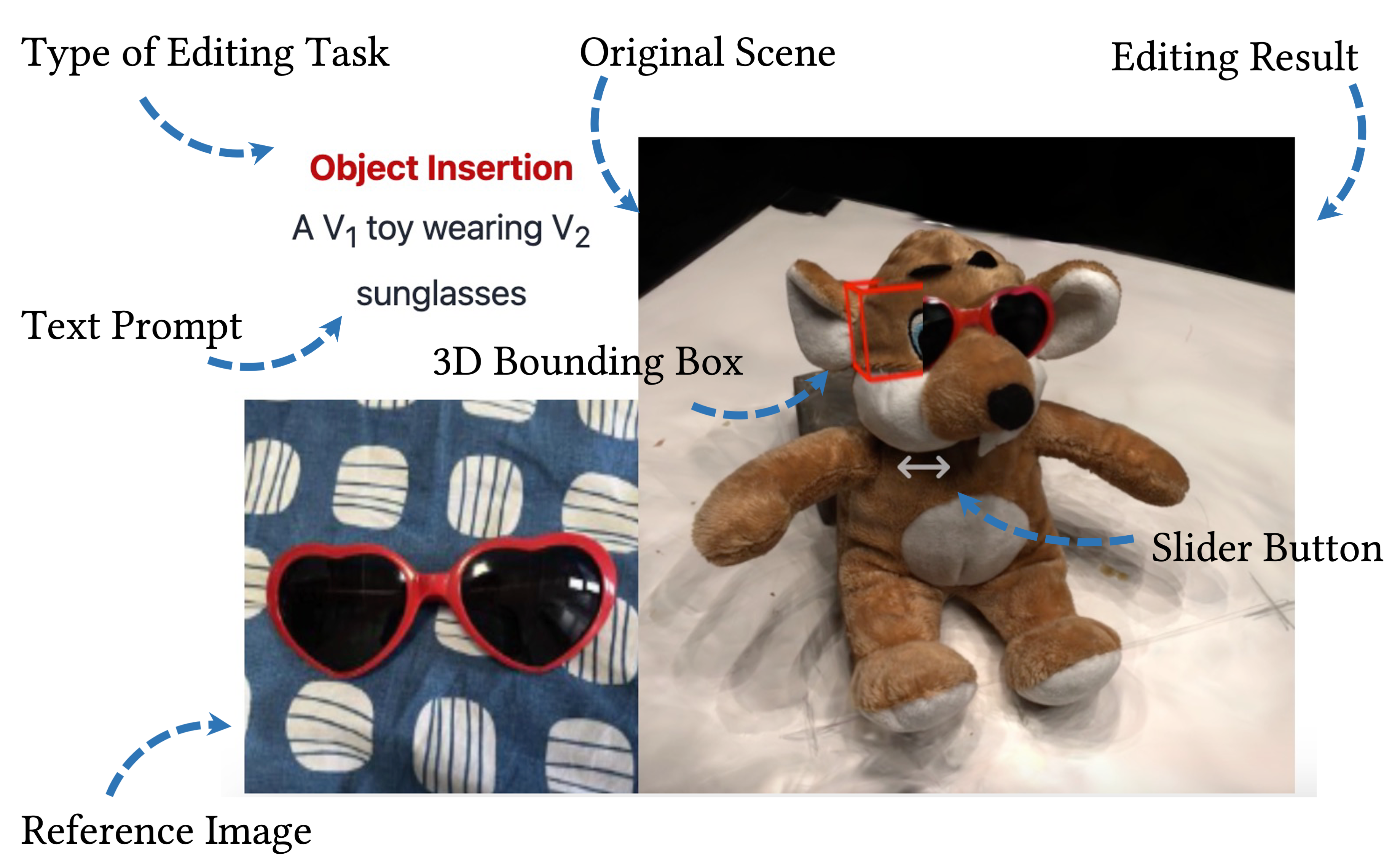

Our TIP-Editor supports different editing tasks:

- Object Insertion: adding a new object to the scene. The object is defined by a reference image, and its position is set with a 3D bounding box.

- Whole Object Replacement: replacing a whole object within the scene with the specific object from the reference image.

- Part-Level Object Editing: altering the shape or texture of a part of an object.

- Sequential Editing: performing multiple edits on the same scene in sequence.

- Re-texturing: modifying the texture of an object while keeping the shape unchanged.

- Stylization: transforming the whole scene to the style of the reference image.
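
To illustrate how a 3D bounding box can confine an edit to one region of an explicit Gaussian representation, here is a minimal sketch. It assumes an axis-aligned box and a per-Gaussian attribute array; the helper names `gaussians_in_box` and `masked_update` are hypothetical and are not taken from the TIP-Editor code, which may handle the box differently.

```python
import numpy as np

def gaussians_in_box(centers, box_min, box_max):
    """Boolean mask of Gaussians whose centers lie inside an axis-aligned box.

    Assumption of this sketch: the editing region is axis-aligned.
    """
    centers = np.asarray(centers, dtype=float)
    box_min = np.asarray(box_min, dtype=float)
    box_max = np.asarray(box_max, dtype=float)
    return np.all((centers >= box_min) & (centers <= box_max), axis=1)

def masked_update(params, grads, mask, lr=0.01):
    """Apply one gradient step only to the rows selected by `mask`.

    Rows outside the mask (the background) are left untouched.
    """
    updated = params.copy()
    updated[mask] -= lr * grads[mask]
    return updated

# Three Gaussians: two inside the unit-sized box around the origin, one outside.
centers = np.array([[0.0, 0.0, 0.0],
                    [0.5, 0.5, 0.5],
                    [2.0, 0.0, 0.0]])
mask = gaussians_in_box(centers, box_min=[-1, -1, -1], box_max=[1, 1, 1])

colors = np.ones((3, 3))        # hypothetical per-Gaussian color attribute
grads = np.full((3, 3), 0.5)    # hypothetical gradient from an editing loss
new_colors = masked_update(colors, grads, mask, lr=0.1)
```

Because each Gaussian is an explicit primitive with its own position, a simple spatial test like this is enough to separate editable content from background, which is one reason an explicit representation is convenient for local editing.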

Scene 1: A plush toy on a table

A V1 toy wearing V2 sunglasses

A V1 toy wearing V2 sunglasses

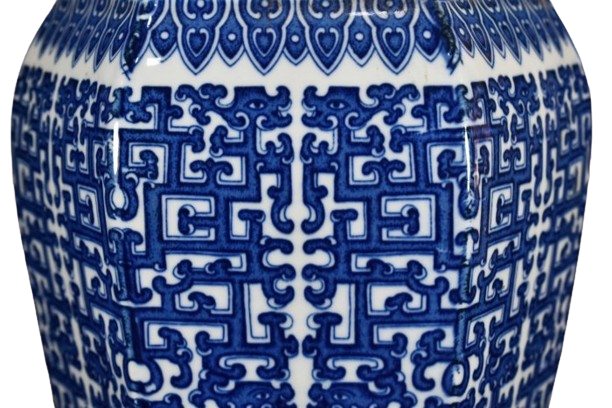

A V1 toy with V2 pattern

A V1 toy wearing a V2 hat

Scene 2: A white dog

A V1 dog wearing V2 sunglasses

A V2 cat

Scene 3: A human face

A V1 man with V2 beard

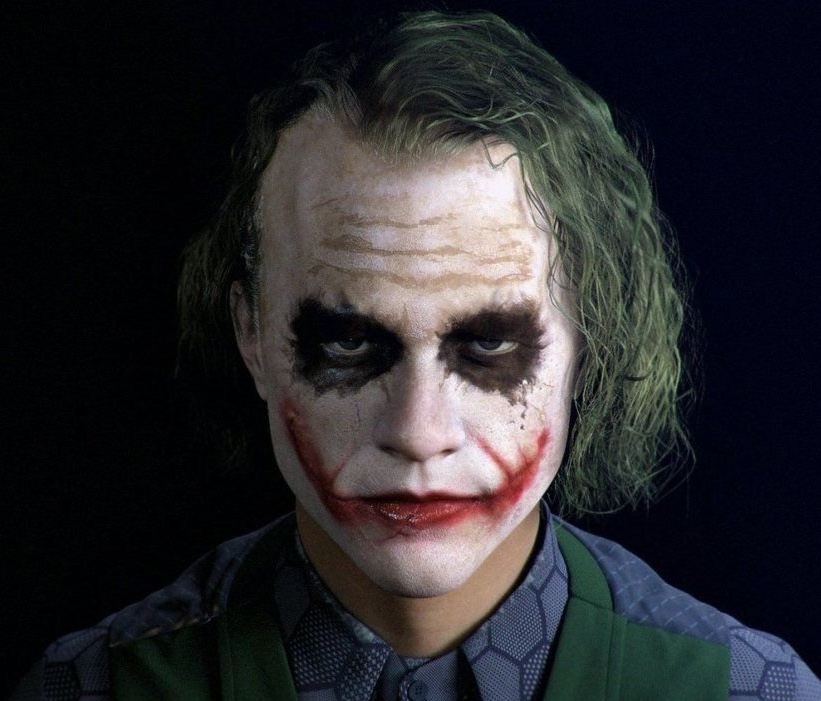

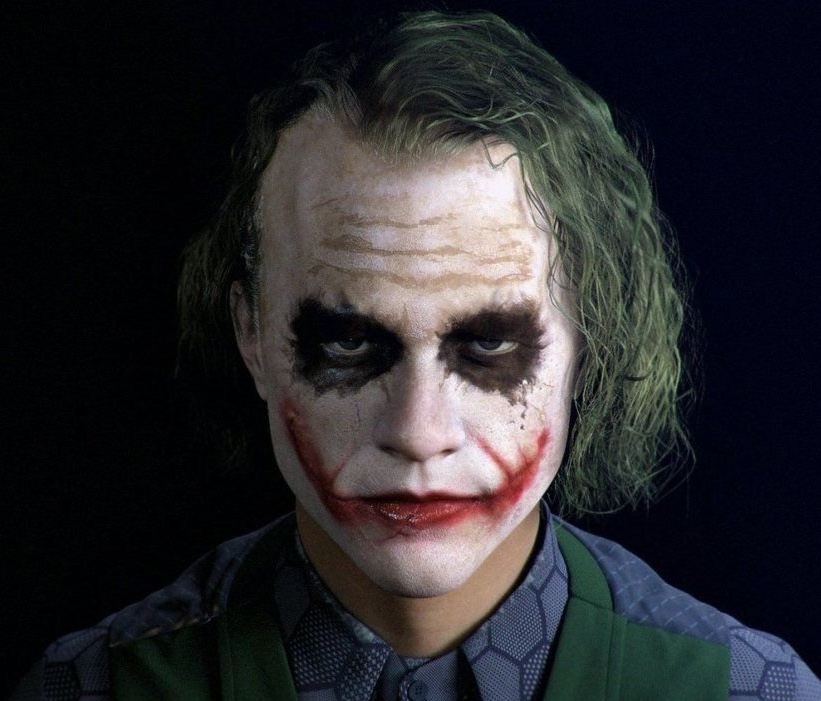

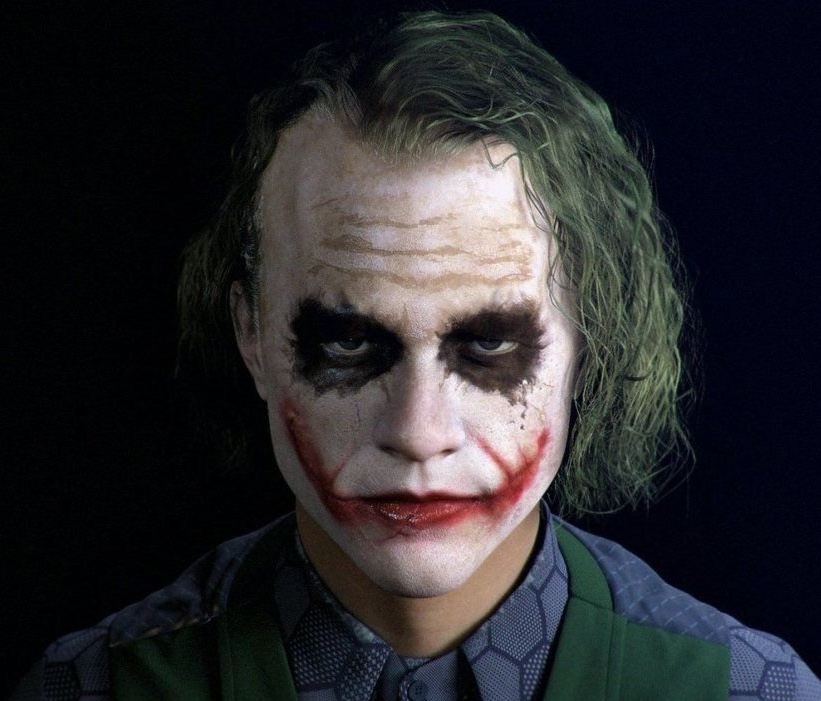

A V2 joker in a room

A V* man wearing a V2 shirt

A V** man with a V2 beard

A V*** man wearing a V2 hat

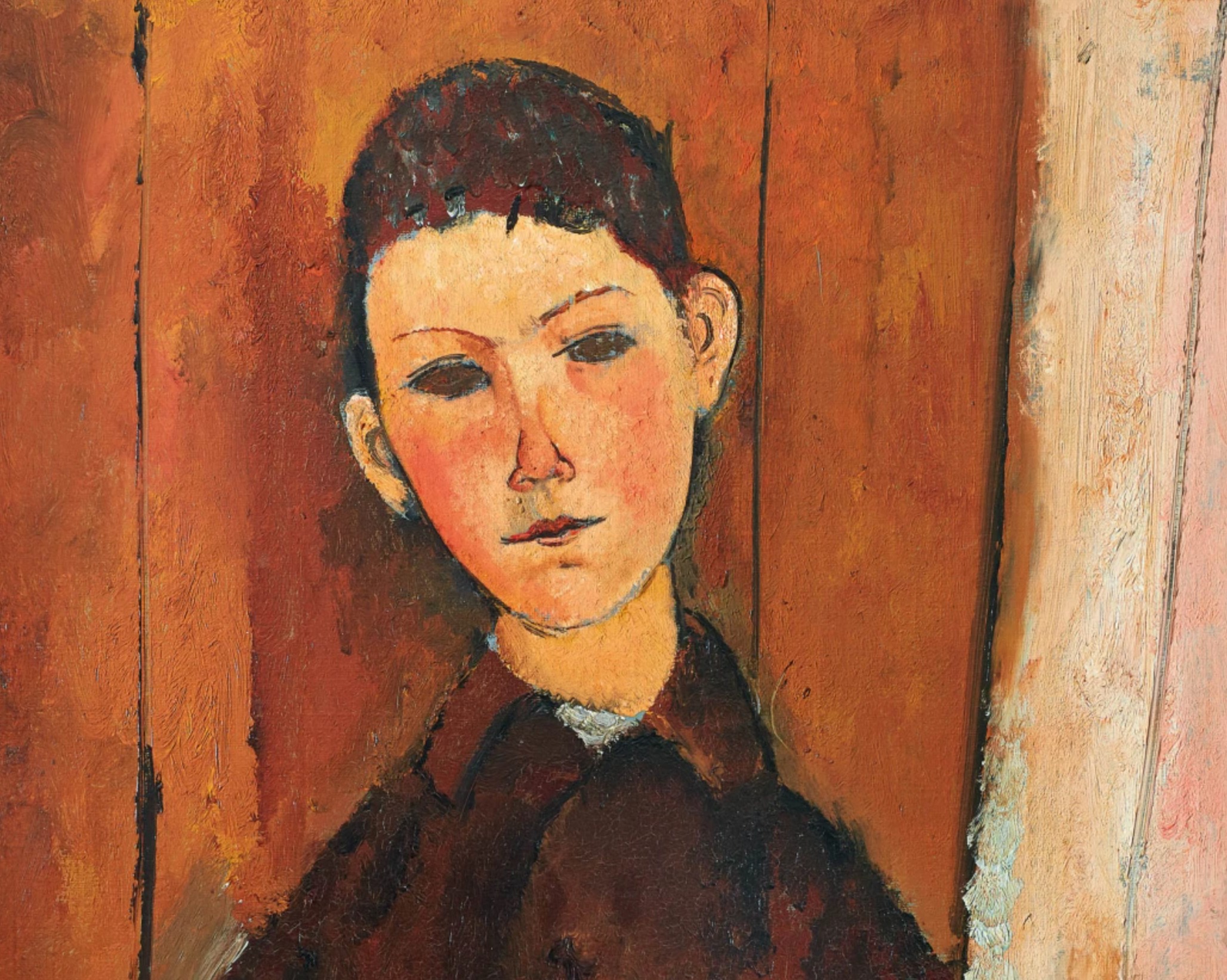

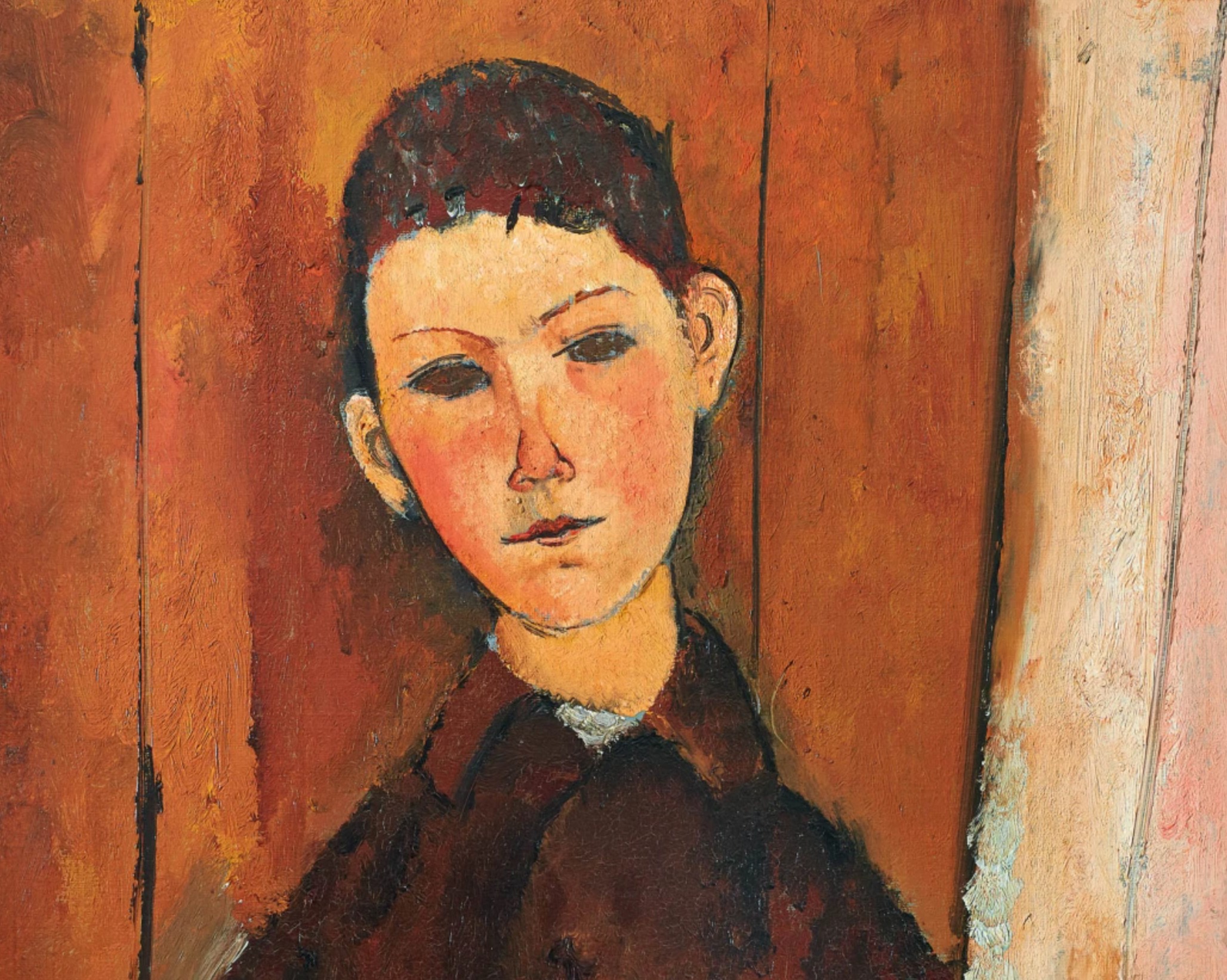

A V2 style painting of a V1 man

Scene 4: A horse sculpture in a garden

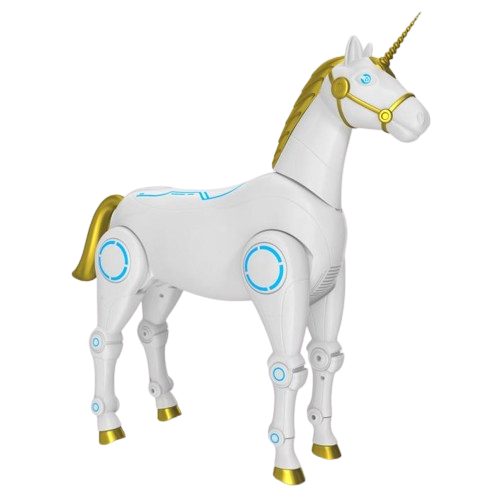

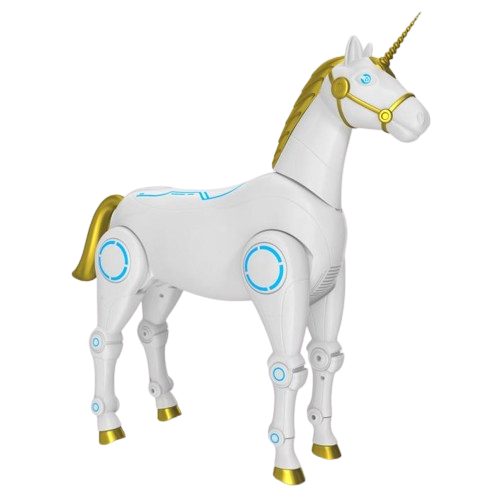

A V2 horse in a garden

A V2 horse in a garden

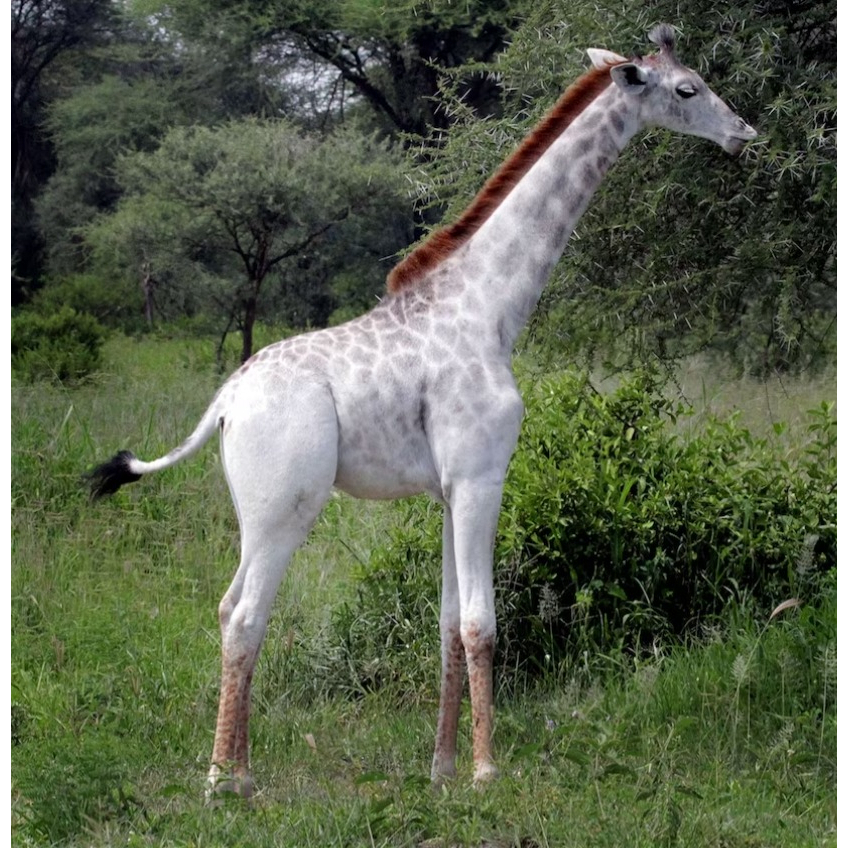

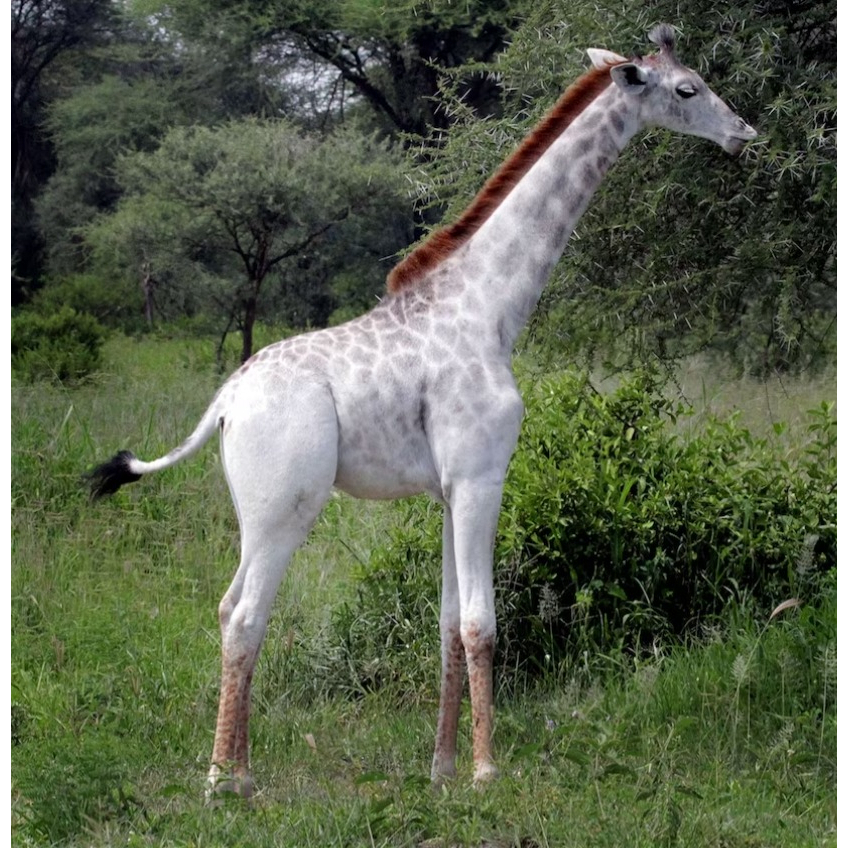

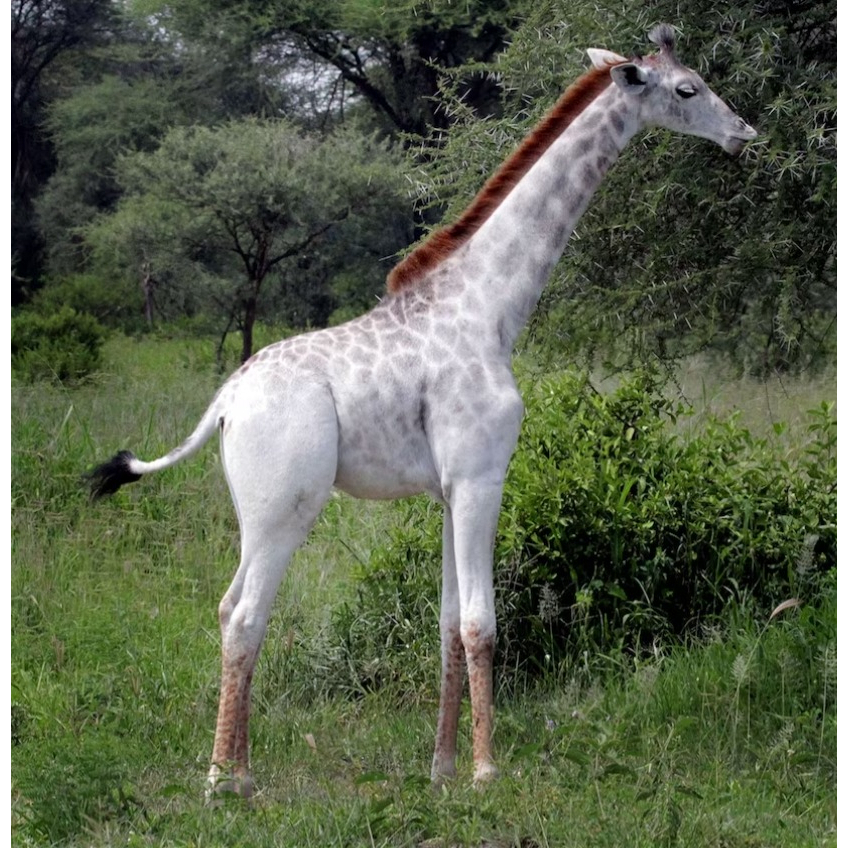

A V2 giraffe in a garden

A V2 giraffe in a garden

A V** giraffe wearing V2 sunglasses in a garden

A V*** horse wearing a V2 hat in a garden

Scene 5: A bear sculpture in woods

A V2 dog in woods

A V1 bear with V2 pattern in woods

Scene 6: An outdoor wood table

A V2 cake on a V1 table

A V2 apple on a V1 table

3. Ablation study results

a) Ablation studies on the stepwise 2D personalization

Results of ablation experiments evaluating the impact of the localization loss $\mathcal{L}_{loc}$ and the LoRA layers in the learning step (refer to Section 4.1 of the paper).
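
This page does not define the localization loss, so the following is only a hypothetical sketch of how such a loss could look. It assumes a cross-attention map for the object token with values in [0, 1] and a 2D mask obtained by projecting the bounding box into the view; the function name `localization_loss` and the "one minus maximum attention inside the box" form are assumptions of this sketch, not a confirmed description of the authors' implementation.

```python
import numpy as np

def localization_loss(attn_map, box_mask):
    """Hypothetical localization loss: 1 - max attention inside the box.

    The loss is small when the object token attends strongly somewhere
    inside the projected bounding box, and large otherwise.
    """
    attn_map = np.asarray(attn_map, dtype=float)
    box_mask = np.asarray(box_mask, dtype=bool)
    if not box_mask.any():
        raise ValueError("box_mask selects no pixels")
    return 1.0 - attn_map[box_mask].max()

# Toy 2x2 attention map whose peak (0.9) is in the bottom row.
attn = np.array([[0.1, 0.2],
                 [0.9, 0.3]])
bottom_row = np.array([[False, False], [True, True]])
top_row = np.array([[True, True], [False, False]])

loss_peak_inside = localization_loss(attn, bottom_row)   # box covers the peak
loss_peak_outside = localization_loss(attn, top_row)     # box misses the peak
```

In this toy case the loss is lower when the box covers the attention peak, which is the behavior one would want from a term that encourages the personalized model to place the object inside the user-specified region.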

A V1 toy wearing V2 sunglasses

b) Ablation study on different 3D representations

Results of different 3D representation methods for the same scene under the guidance of the same fine-tuned diffusion model.

A V2 giraffe in a garden

c) Effectiveness of the pixel-level refinement step

Comparison of the coarse editing results (left) and the refinement results (right).

A V1 toy wearing V2 sunglasses

A V2 giraffe in a garden

A V2 joker in a room

4. Comparisons with state-of-the-art methods

Due to the lack of dedicated image-based editing baselines, we compare with two state-of-the-art text-based radiance field editing methods:

- Instruct-NeRF2NeRF (I-N2N): I-N2N utilizes InstructPix2Pix to update the rendered multi-view images according to special text instructions obtained with GPT-3. In our experiments, we use BLIP-2 to obtain a textual description of our reference image and then convert it into a suitable instruction as in I-N2N.

- DreamEditor: DreamEditor adopts a NeuMesh representation distilled from a NeRF and includes an explicit attention-based localization operation to support local editing. For a fair comparison, we replace its automatic localization with a more accurate, manually selected editing area.

A V1 toy wearing V2 sunglasses

A V1 toy wearing V2 sunglasses

A V1 toy wearing a V2 hat

A V2 giraffe in a garden

A V2 horse in a garden

A V2 horse in a garden

A V2 joker in a room

A V1 man wearing a V2 shirt

A V1 man with a V2 beard

A V2 style painting of a V1 man